Correspondence Analysis of Square Tables

Square tables are data tables in which the rows and columns have the same labels; a common example is a crosstab of brand-switching or brand-repertoire data. Correspondence analysis is often used to visualize these tables as a much simpler chart. In this post I discuss the special case of square tables, using two examples: brand switching between breakfast cereals, and switching between professions.

As background, an earlier post describes what correspondence analysis is, and another explains how it works and how to interpret the results.


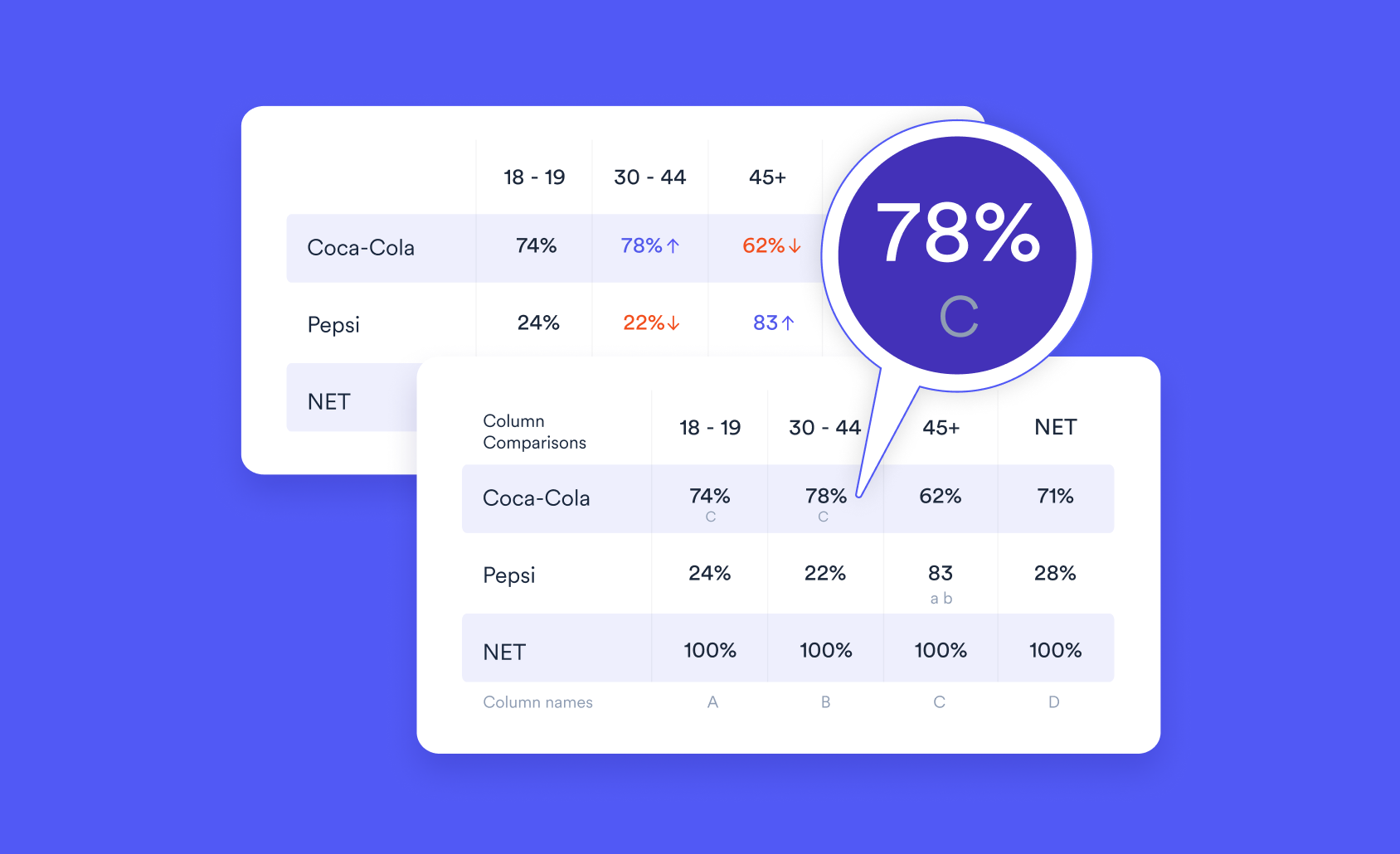

A typical table used for correspondence analysis shows the responses to one question along the rows and responses to another question along the columns. Each question has its own set of mutually exclusive categorical responses. The cell at the intersection of any row and column contains the count of cases with that combination of row and column responses. I say "typical table" because there are other use cases, such as tables of time series, raw data, and means, all of which are covered in a separate post on when to use correspondence analysis.

In general, the sets of responses labeling the rows and columns are different. For example, the rows may be labeled by each respondent's favorite color and the columns by their favorite sport. If instead we labeled the columns by each respondent's partner's favorite color, we would have an example of a square table.

A square table, in this case, does not just have the same number of rows as columns. The rows and columns have identical labels, and they are presented in the same order. Such tables may also be called switching matrices, transition tables or confusion matrices.

Below I show an example as a heatmap for easier visualization. The data relate to brand switching between breakfast cereals: the rows contain the first cereal purchased, and the columns contain the next cereal purchased.

Data symmetry

Before delving into the correspondence analysis, let's take a look at the data above. One of the first observations that we can make about it is the strong diagonal. On the whole, people tend to buy the same cereal repeatedly.

Looking away from the diagonal, there is also a high degree of symmetry. For example, the number of people switching from Cornflakes to Rice Krispies (80) is almost the same as the number switching in the other direction (81). Both of these observations are quite typical of square tables from consumer data.

Now let's perform the correspondence analysis. The scatterplot below shows the first 2 output dimensions.

Interpretation of Square Correspondence Analysis

It's tempting to draw immediate conclusions from the plot above. Before we do so, we need to take note of a few things.

First, any square matrix N can be written as the sum of a symmetric component, (N + N^T)/2, and a skew-symmetric component, (N - N^T)/2, where N^T is the transpose of N. Correspondence analyses of these two components are driven by different aspects of the data, so they are best analyzed separately.

- The symmetric component shows us how much 2-way exchange occurs between categories. Points that are close together have a relatively high rate of exchange between them.

- The skew-symmetric component determines the net flow into or out of a category. Points that are close together have similar net flows with the other categories.
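The split itself is simple arithmetic. Below is a minimal NumPy sketch of it. It assumes the table is held as a 2-D array whose rows and columns are in the same order. The function name `split_square_table` and the toy counts are hypothetical; they are neither the cereal data nor Displayr's code.

```python
import numpy as np


def split_square_table(table):
    """Split a square table into symmetric and skew-symmetric parts.

    Returns (sym, skew) with table == sym + skew, sym == sym.T and
    skew == -skew.T.
    """
    t = np.asarray(table, dtype=float)
    if t.ndim != 2 or t.shape[0] != t.shape[1]:
        raise ValueError("expected a square table")
    sym = (t + t.T) / 2   # average flow in the two directions (2-way exchange)
    skew = (t - t.T) / 2  # half the difference between directions (net flow)
    return sym, skew


# Hypothetical 3-brand switching counts: rows = first purchase, columns = next.
table = np.array([[50, 10, 4],
                  [12, 40, 6],
                  [3, 8, 30]])
sym, skew = split_square_table(table)
print(sym)
print(skew)
```

For example, 10 people switch from brand 1 to brand 2 and 12 switch back. The symmetric part records an average exchange of 11 in both cells. The skew-symmetric part records -1 and +1, a small net flow toward brand 1.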

We can tell which dimensions are symmetric and which are skew-symmetric by inspecting how much variance each dimension explains. The symmetric component produces dimensions that each explain a different amount of the variation in the table; in more technical language, the eigenvalues, inertias, or canonical correlations are distinct. Correspondence analysis of the skew-symmetric component produces dimensions that occur in pairs, and both dimensions within a pair explain the same amount of variation.
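The pairing can be checked numerically. The sketch below follows the block-matrix decomposition described by Greenacre (2000). Both parts are weighted by masses that average each category's row and column shares. The symmetric part is centered as in ordinary correspondence analysis; the skew-symmetric part is not. This is an illustrative NumPy sketch with a hypothetical function name and toy counts, not the flipDimensionReduction or ca code used for this post. The weighted skew-symmetric matrix is itself skew-symmetric, so its singular values, and hence its inertias, come in equal pairs.

```python
import numpy as np


def square_ca_inertias(table):
    """Inertias (squared singular values) of the symmetric and
    skew-symmetric parts of a square table, largest first."""
    t = np.asarray(table, dtype=float)
    p = t / t.sum()                               # correspondence matrix
    mass = (p.sum(axis=1) + p.sum(axis=0)) / 2    # average of row and column masses
    expected = np.outer(mass, mass)
    scale = np.sqrt(expected)
    sym_resid = ((p + p.T) / 2 - expected) / scale  # centered, as in ordinary CA
    # Not centered: the expected values are symmetric, so they drop out here.
    skew_resid = ((p - p.T) / 2) / scale
    sym_inertia = np.linalg.svd(sym_resid, compute_uv=False) ** 2
    skew_inertia = np.linalg.svd(skew_resid, compute_uv=False) ** 2
    return sym_inertia, skew_inertia


# Hypothetical 3-category switching counts.
table = np.array([[50, 10, 4],
                  [12, 40, 6],
                  [3, 8, 30]])
sym_in, skew_in = square_ca_inertias(table)
total = sym_in.sum() + skew_in.sum()
print("symmetric %:", np.round(100 * sym_in / total, 2))
print("skew-symmetric %:", np.round(100 * skew_in / total, 2))
```

Concatenating the two arrays and sorting in decreasing order gives a single ranking of dimensions, in which the skew-symmetric inertias appear as equal pairs. The smallest symmetric inertia is zero, up to rounding, because centering removes one trivial dimension.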

Second, always take note of the amount of variance explained by each dimension. When the total explained by both dimensions of a chart is much less than 100%, the unseen dimensions contain a significant amount of information.

Third, points further from the origin are more strongly differentiated. Conversely, points that are close to the origin are less distinct and may not be similar (other than their mutual lack of distinction!).
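The origin is the mass-weighted average of the points. A point near it therefore has a profile close to the average, rather than one that resembles its neighbors. Below is a minimal NumPy sketch of standard row principal coordinates for the symmetric part. It assumes the mass weighting of Greenacre (2000), and the function name and toy counts are hypothetical. The sign of each axis is arbitrary, but distances from the origin are unaffected by it.

```python
import numpy as np


def symmetric_coordinates(table, k=2):
    """Principal coordinates of each category on the first k dimensions
    of the symmetric part of a square table."""
    t = np.asarray(table, dtype=float)
    p = t / t.sum()
    mass = (p.sum(axis=1) + p.sum(axis=0)) / 2
    expected = np.outer(mass, mass)
    resid = ((p + p.T) / 2 - expected) / np.sqrt(expected)
    u, sv, _ = np.linalg.svd(resid)
    # Rescale singular vectors by singular values and by 1 / sqrt(mass).
    return u[:, :k] * sv[:k] / np.sqrt(mass)[:, None]


# Hypothetical 3-category switching counts.
table = np.array([[50, 10, 4],
                  [12, 40, 6],
                  [3, 8, 30]])
coords = symmetric_coordinates(table)
distance = np.linalg.norm(coords, axis=1)  # larger = more distinctive
print(np.round(distance, 3))
```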

Finally, a separate post covers the interpretation of correspondence analysis in much more detail.

Cereal interpretation

To understand the cereal correspondence analysis, let's look at the variance explained by each dimension:

We see that dimensions 1 to 6 each explain a distinct amount of variance, so they are symmetric. A closer look at the raw output below shows that dimensions 7 to 12 occur in pairs, so they result from the skew-symmetric component.

Since the earlier scatter plot showed the first two dimensions, we can now say that they are symmetric, so the closeness of points reflects two-way exchange. The plot shows relatively little switching to or from Shredded Wheat. Frosties and Crunchy Nut Cornflakes form a pair, indicating a relatively high level of switching between those brands. The other 4 brands also form a loose group of mutual interchange. However, these two dimensions account for only 62% of the variance, so they do not tell us everything about the data.

The fact that the first 6 dimensions result from the symmetric component confirms our earlier observation about the symmetry of the data. In fact, 99.5% of the variance is due to symmetry. It is not unusual for the symmetric component to dominate. In this case, it would be unwise to plot the skew-symmetric dimensions, since they represent such a tiny part of the information. I would also never plot a symmetric and a skew-symmetric dimension on the same chart.
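A share such as this 99.5% can be computed directly from the table. The sketch assumes the decomposition of Greenacre (2000), in which total inertia splits exactly into a symmetric part and a skew-symmetric part. Each part equals the sum of squared entries of its weighted matrix, which is the same as the sum of that part's dimension inertias. The function name and toy counts are hypothetical, and this is not necessarily how the figure in this post was produced.

```python
import numpy as np


def symmetric_share(table):
    """Fraction of total inertia due to the symmetric part of a square table."""
    t = np.asarray(table, dtype=float)
    p = t / t.sum()
    mass = (p.sum(axis=1) + p.sum(axis=0)) / 2
    expected = np.outer(mass, mass)
    scale = np.sqrt(expected)
    # Sum of squared weighted entries = sum of the inertias of each part.
    sym_inertia = np.sum((((p + p.T) / 2 - expected) / scale) ** 2)
    skew_inertia = np.sum((((p - p.T) / 2) / scale) ** 2)
    return sym_inertia / (sym_inertia + skew_inertia)


# Hypothetical 3-category switching counts.
table = np.array([[50, 10, 4],
                  [12, 40, 6],
                  [3, 8, 30]])
print(f"{100 * symmetric_share(table):.1f}% of the inertia is symmetric")
```

A perfectly symmetric table gives a share of exactly 1, since its skew-symmetric part is zero.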

Less Symmetric Data

As a second example, I am using data about how people transition between jobs. This is a somewhat mature data set, referring to German politicians in the 1840s. You will not find software engineer listed. The rows of the table tell us the professions held by the politicians prior to their terms, and the columns tell us the professions that they held after they left office.

The plot below shows the first two symmetric dimensions.

From this, we conclude that there is a relatively high exchange between Justice, Administration, and Lawyer. There is also a high exchange between Education and Self-employed.

The skew-symmetric component accounts for 15% of the variance, which is much more than for the cereal data but still a small part of the whole. On the chart below we see that Lawyer and Justice are at the extremities. This means that these two professions have relatively large net flows: one has a high net inflow and the other a high net outflow.

We cannot tell from the chart which profession has the inflow and which has the outflow; the only way to tell is to look at the raw data. To clarify this point, we can compute the net inflow for each profession as the difference between its column total (politicians who held it after leaving office) and its row total (those who held it before their terms) in the original table. The final column chart shows that Lawyer has the inflow, and Justice has the outflow.
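The net-inflow calculation takes a few lines. Below is a minimal NumPy sketch. It assumes rows are the "before" state and columns the "after" state, as in the politician table. The labels "A", "B", "C" and the counts are hypothetical, not Greenacre's data.

```python
import numpy as np


def net_inflow(table, labels):
    """Net inflow per category: column total (arrivals) minus row total
    (departures). Rows are the 'before' state, columns the 'after' state."""
    t = np.asarray(table, dtype=float)
    inflow = t.sum(axis=0)   # everyone who ends up in each category
    outflow = t.sum(axis=1)  # everyone who starts in each category
    return dict(zip(labels, inflow - outflow))


# Hypothetical categories and counts.
table = np.array([[50, 10, 4],
                  [12, 40, 6],
                  [3, 8, 30]])
flows = net_inflow(table, ["A", "B", "C"])
print(flows)
```

People who stay in the same category, on the diagonal, cancel out. The result equals -2 times the row sums of the skew-symmetric part (N - N^T)/2, which is why net flows belong to the skew-symmetric side of the analysis. The net inflows across all categories always sum to zero.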

Conclusion

One key advantage of using correspondence analysis specifically for a square table is that we do not need to plot row and column labels separately. This means that we can interpret the closeness of points on a single scale. However, as with all correspondence analysis, we need to take care to draw correct conclusions. In particular, the symmetric and skew-symmetric components should be analyzed independently. The symmetric parts tell us about the exchange between different categories, while the skew-symmetric parts tell us about net flows into or out of categories.

TRY IT OUT

All the analysis in this post was conducted in Displayr. You can review the underlying code or run your own analysis by clicking through to this brand switching example. The flipDimensionReduction package (available on GitHub) was used, which itself uses the ca package for correspondence analysis. Check out Q for fast advanced survey analysis and crosstab software.

The cereal data is from Dawes, John (2007). "The Structure of Switching: An Examination of Market Structure Across Brands and Brand Variants." The historical German politician data is from Greenacre, Michael (2000). "Correspondence Analysis of Square Asymmetric Matrices." Journal of the Royal Statistical Society, Series C (Applied Statistics) 49(3): 297-310.