A/B testing involves comparing two different approaches to solving a problem – approach A and approach B – and working out which is better based on data. A/B testing is also known as randomized testing, two-sample testing, split testing, and bucket testing.

There are two key aspects to an A/B test. The first is that people must be randomly assigned to one of two groups, with one group shown the A approach and the other group the B approach. The second is that statistical testing is used to work out whether A, B, or neither is better.
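Random assignment can be sketched in a few lines of Python. This is a minimal illustration, not a description of any particular testing tool: the function name `assign_groups`, the `seed` parameter, and the numeric user IDs are all assumptions made for the example.

```python
import random


def assign_groups(user_ids, seed=None):
    """Randomly split users into two groups, A and B, of near-equal size.

    A seeded generator makes the split reproducible; pass seed=None
    for a different split on every run.
    """
    rng = random.Random(seed)
    shuffled = list(user_ids)
    rng.shuffle(shuffled)  # software, not a person, decides the order
    half = len(shuffled) // 2
    return {"A": shuffled[:half], "B": shuffled[half:]}


# Hypothetical database of 100 people, identified by the numbers 1..100.
groups = assign_groups(range(1, 101), seed=42)
print(len(groups["A"]), len(groups["B"]))
```

Shuffling the whole list and cutting it in half keeps the two groups the same size. Assigning each person by an independent coin flip is also random, but the group sizes will then differ slightly.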

Example of A/B testing

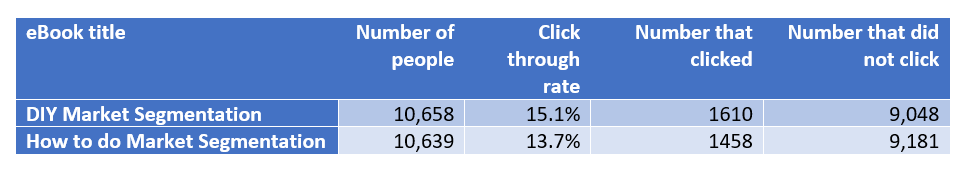

Here is an example of how we did an A/B test. Our goal was to work out whether it was better to describe our eBooks as "DIY" or "How-to". 10,658 people in our database were sent an email with a link to a DIY Market Segmentation eBook, becoming test case A. Another 10,639 were sent an email with a link to a How to do Market Segmentation eBook, becoming test case B. We got the following results:

The difference in the click-through rate is only 1.4%, so we conducted a statistical test (a Bayesian binomial test of proportions) to rule out the possibility that the difference is due to sampling error (i.e., chance). The test shows that there is a 99.75% chance that the DIY title is superior to the How-to title. That is close enough to certainty for us to use DIY going forward. In case you were curious, you can check out DIY Market Segmentation!
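To give a feel for what a Bayesian comparison of two proportions involves, here is a minimal sketch using only the Python standard library. It is not necessarily the exact test we ran. It assumes uniform Beta(1, 1) priors and estimates the probability that A beats B by simulation. The group sizes come from the example above, but the click counts (640 and 490) are hypothetical placeholders, so the output will not reproduce the 99.75% figure.

```python
import random


def prob_a_beats_b(clicks_a, n_a, clicks_b, n_b, draws=50_000, seed=0):
    """Monte Carlo estimate of P(rate_A > rate_B).

    Assumes a uniform Beta(1, 1) prior on each click-through rate, so each
    posterior is Beta(1 + clicks, 1 + non-clicks).
    """
    rng = random.Random(seed)
    wins = 0
    for _ in range(draws):
        rate_a = rng.betavariate(1 + clicks_a, 1 + n_a - clicks_a)
        rate_b = rng.betavariate(1 + clicks_b, 1 + n_b - clicks_b)
        if rate_a > rate_b:
            wins += 1
    return wins / draws


# Group sizes are from the example; click counts are HYPOTHETICAL.
p = prob_a_beats_b(clicks_a=640, n_a=10_658, clicks_b=490, n_b=10_639)
print(f"Estimated probability that A is better: {p:.4f}")
```

The result reads as "the chance that A has the higher true click-through rate, given the data and the prior", which is the same kind of statement as the 99.75% above.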

Requirements for an effective A/B test

A/B testing requires:

- The comparison of two treatments or approaches. You can compare more than two, but then you are doing A/B/n testing, or multivariate testing if you are testing combinations of several elements at once.

- A clear hypothesis about the difference between the two treatments. At the end of the A/B test you want to be able to say not only which option won but also understand why it won. This usually means that you should design an A/B test with treatments that are pretty similar, making it relatively easy to isolate which part of the treatment led to conversion. For example, you may just test the color of a button.

- A relatively large number of people to expose to each of the treatments. How many people you need depends on how big the difference is likely to be between the effects of the treatments. If testing minor differences in wording or colors, you usually need thousands of people. If you expect big differences, such as a 20% difference between A and B, then samples with as few as 50 in each group can be OK.

- A metric for comparing the two treatments. In the example above the metric was the click-through rate. There are many other possible metrics, such as average dollars spent and the percentage that signed up.

- A randomization mechanism for assigning people to each of the treatments. Random means that software, rather than people, uses a random number generator to allocate people to each group.

- No ability for human intervention to ruin the test. For example, A/B testing involving salespeople testing different scripts often fails because if a person does not like their sales script, they tend to modify it or make a half-hearted attempt.

- Statistical testing software to work out the strength of evidence in favor of A versus B. The smaller the sample size, the more important it is to use statistical testing software, because as a rule, most people over-interpret differences, thinking that A or B has tested better, when there is no evidence one way or another.
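The sample size requirement in the list above can be made concrete with a rough calculation. The sketch below uses the standard normal-approximation formula for comparing two proportions. The 5% two-sided significance level and 80% power are conventional defaults assumed for illustration, not figures from this article, and the function name `sample_size_per_group` and the example rates are likewise hypothetical.

```python
import math
from statistics import NormalDist


def sample_size_per_group(p_a, p_b, alpha=0.05, power=0.8):
    """Approximate people needed in EACH group to detect p_a versus p_b.

    Normal-approximation formula for a two-sided test of two proportions:
    n = (z_alpha + z_beta)^2 * (p_a(1 - p_a) + p_b(1 - p_b)) / (p_a - p_b)^2
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # significance threshold
    z_beta = NormalDist().inv_cdf(power)           # desired power
    variance = p_a * (1 - p_a) + p_b * (1 - p_b)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p_a - p_b) ** 2)


# A minor difference: 5% versus 6% conversion (hypothetical rates).
small_effect = sample_size_per_group(0.05, 0.06)
# A big difference: 10% versus 30% conversion (hypothetical rates).
large_effect = sample_size_per_group(0.10, 0.30)
print(small_effect, large_effect)
```

Under these assumptions the minor difference needs roughly eight thousand people per group, while the 20-point difference needs only about sixty, which is in line with the rule of thumb above.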

Why companies use A/B testing

The basic logic of A/B testing is to use scientific principles to improve business processes. People typically use A/B testing to achieve two outcomes. One is to settle debates using data, rather than arguments. The second is to build on and continually add to knowledge about what does work and why, rather than reinventing the wheel.

A/B testing and SEO

When using A/B testing to optimize a website, there is a chance that the testing can damage SEO. Common causes include showing search engines a different web page from the one ordinary visitors see (known as cloaking), failing to use the HTML rel="canonical" attribute to point variations back to the original version of the web page, using 301 permanent redirects rather than 302 temporary redirects, and forgetting to turn off A/B tests once they are finished.
